Linking the regional climate-ecology attribution chain in the western United States

Many people are curious whether particular regional environmental changes can be traced to global climate change. A number of large-scale changes clearly qualify: the rapid warming of the Arctic and sub-Arctic, for example, and the earlier onset of spring in the Northern Hemisphere, with the associated phenological changes in plants and animals. But at smaller scales of space or time, global-to-local connections become harder to establish. This reflects the combined effect of the resolution of climate models, the intrinsic variability of the climate system, and the limits of the empirical climatic, environmental, and ecological data; together these set the signal-to-noise ratio of possible causes and observed effects. Recent work by ecologists, climate scientists, and hydrologists in the western United States relating global climate change, regional climate change, and regional ecological change is therefore of great significance. Together, their results show an increasing ability to link the chain at smaller, and presumably more viscerally meaningful and politically tractable, scales.

For instance, a couple of weeks ago a paper in Science by Phil van Mantgem of the USGS and colleagues showed that over the last few decades, background levels of tree mortality have been increasing in undisturbed old-growth forests in the western United States, without the accompanying increase in tree “recruitment” (new trees) that would balance the ledger over time. Background mortality is the regular, ongoing process of tree death, unrelated to the more visible, catastrophic mortality caused by events such as fires, insect attacks, and windstorms; it is typically less than 1% per year. It is the portion of tree death due to the direct and indirect effects of competition among trees, climate (often manifested as water stress), and old age. Because many factors can affect background mortality, van Mantgem et al. were careful to minimize the influence of other possible explanatory variables through their selection of study sites, while still maintaining a relatively long record over a wide geographic area. These other possible causes include, especially, increases in crowding (density, a notorious confounding factor arising from previous disturbances and/or fire suppression) and edge effects (trees close to an opening experience a generally warmer and drier microclimate than those in the forest interior).

They found that in each of three regions (the Pacific Northwest, California, and the Interior West), mortality rates have been doubling every 17 to 29 years, depending on location, and that the increase holds across all dominant species, all size classes, and all elevations. Using downscaled climate information, the authors show that the rising mortality rates likely correspond to increases in summer soil moisture stress over that period, driven by rising temperatures with little or no change in precipitation in these regions. Fortunately, natural background mortality rates in western forests are typically less than 0.5% per year, so rate doublings over ~20-30 years will not, by themselves, have large immediate impacts. The longer-term consequences remain an open question, however, depending on future climate and on tree recruitment and mortality rates. Nevertheless, the authors have clearly shown that mortality rates have been increasing over the last ~30 years. This raises the $64,000 question: are these changes attributable, in part or in whole, to human-induced global warming?
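
A doubling time translates directly into an annual growth rate. The Python sketch below assumes, for illustration only, that mortality rises exponentially with a constant doubling time T_d, so that m(t) = m0 · 2^(t/T_d) and the implied continuous growth rate is r = ln 2 / T_d. It starts from the 0.5%-per-year figure above and ignores recruitment. The function names are hypothetical, and the sketch is not meant to reproduce van Mantgem et al.'s statistical analysis.

```python
import math


def growth_rate_from_doubling(doubling_time_years):
    """Continuous annual growth rate r implied by a doubling time T_d (r = ln 2 / T_d)."""
    return math.log(2) / doubling_time_years


def mortality_after(initial_rate, doubling_time_years, years):
    """Annual mortality rate after `years`, assuming m(t) = m0 * 2**(t / T_d)."""
    return initial_rate * 2 ** (years / doubling_time_years)


def cohort_survival(initial_rate, doubling_time_years, years):
    """Fraction of today's trees still alive after `years` whole years, applying
    each year's (rising) mortality rate in turn and ignoring recruitment."""
    alive = 1.0
    for year in range(years):
        alive *= 1.0 - mortality_after(initial_rate, doubling_time_years, year)
    return alive


if __name__ == "__main__":
    m0 = 0.005  # 0.5% per year, the upper background rate quoted in the text
    for t_d in (17, 29):
        print(f"T_d={t_d} yr: growth {100 * growth_rate_from_doubling(t_d):.1f}%/yr, "
              f"rate after 30 yr {100 * mortality_after(m0, t_d, 30):.2f}%/yr, "
              f"30-yr survival {cohort_survival(m0, t_d, 30):.3f} "
              f"(vs {(1 - m0) ** 30:.3f} at a constant rate)")
```

With T_d = 17 years the implied growth in the mortality rate is about 4.1% per year; with T_d = 29 years it is about 2.4% per year.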

Yes, argue a pair of December papers in the Journal of Climate and a 2008 paper in Science. The studies, by Bonfils et al. (2008), Pierce et al. (2008), and Barnett et al. (2008), link observed western temperatures and temperature-induced snowmelt processes to human-forced (greenhouse gas, ozone, and aerosol) global climate change. The authors used various combinations of three GCMs, two statistical downscaling techniques (to account for micro-climate effects that are not resolved in the GCMs), and a high-resolution hydrology model to test the various possible causes of the observed climatic changes and the robustness of their methods. The possible causes were the usual suspects: natural climatic variability, the human-induced forcings just mentioned, and non-human forcings (solar and volcanic). Climate models were chosen specifically for their ability to reproduce important natural climatic fluctuations in the western US that influence temperature, precipitation, and snowpack dynamics, particularly the Pacific Decadal Oscillation and the El Niño/La Niña cycle, and/or for their ability to generate the daily climate values needed as input to the hydrologic model. The relevant climate variables included various subsets of minimum and maximum daily temperatures from January to March (JFM), their corresponding monthly averages, degree days (days with mean T>0ºC), and the ratio of Snow Water Equivalent (SWE) to water-year precipitation (P). In each case, multiple hundred-year control runs were generated with two GCMs to characterize natural variability, and forced runs from previous model intercomparison projects were then used to identify the impacts of the various forcings.
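
The papers rely on formal detection-and-attribution statistics, but the basic role of the control runs can be illustrated more simply: an unforced control run shows how large a trend natural variability alone can produce over a record of a given length, and an observed trend well outside that range is hard to explain without external forcing. The Python sketch below is a simplified stand-in, not the authors' method. The 95% threshold, the use of overlapping windows (which are not independent), the white-noise series, and all function names are assumptions for illustration.

```python
import random


def ols_slope(values):
    """Least-squares linear trend (units per time step) of an evenly spaced series."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    num = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(values))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def control_trends(control_run, window):
    """Trends of every overlapping `window`-step segment of an unforced control run.
    Overlapping segments are not independent; this is a simplification."""
    return [ols_slope(control_run[i:i + window])
            for i in range(len(control_run) - window + 1)]


def trend_exceeds_natural(observed, control_run, quantile=0.95):
    """Return (detected, threshold), where `detected` is True if the observed trend
    is above the given quantile of same-length trends from the control run."""
    trends = sorted(control_trends(control_run, len(observed)))
    threshold = trends[int(quantile * (len(trends) - 1))]
    return ols_slope(observed) > threshold, threshold


if __name__ == "__main__":
    rng = random.Random(0)
    # Synthetic stand-ins, NOT model output or observations:
    # 500 "years" of white noise for the control run, and a 50-year
    # "observed" series with an imposed trend of 0.05 per year.
    control = [rng.gauss(0.0, 1.0) for _ in range(500)]
    observed = [0.05 * t + rng.gauss(0.0, 1.0) for t in range(50)]
    detected, threshold = trend_exceeds_natural(observed, control)
    print(f"observed trend {ols_slope(observed):.3f}/yr, "
          f"95th-percentile control trend {threshold:.3f}/yr, detected={detected}")
```

Real studies must also contend with model biases in simulated variability, which is one reason the authors cross-checked results across several GCMs and downscaling methods.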

The results? The authors estimate that about 50% of the observed change in April 1 SWE, and about 60% of the advance in river discharge timing and of the January-to-March temperature increase, cannot be accounted for by either natural variability or non-human forcings. Bonfils et al. also note that, as the observational record of the last several decades shows, the decreases in SWE are due to January-to-March temperature increases, not to decreases in winter precipitation. April snowpack is a key variable because, along with spring-through-early-fall temperatures, it strongly influences growing-season soil moisture throughout the western United States, and thus directly affects forest productivity and demographic processes.
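
The SWE/P ratio is useful for exactly this distinction: dividing snowpack by water-year precipitation factors out changes in how much precipitation fell, so a declining ratio while P itself shows no trend points to snowpack loss from something other than reduced precipitation, such as warming. The Python sketch below shows that diagnostic with hypothetical function names and synthetic inputs; it is a simple check, not the attribution method used in the papers.

```python
def ols_slope(values):
    """Least-squares linear trend (units per year) of an evenly spaced annual series."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    num = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(values))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def snow_ratio_trends(april1_swe, water_year_precip):
    """Return (trend in P, trend in SWE/P) for paired annual series of
    April 1 snow water equivalent and water-year precipitation (same units)."""
    if len(april1_swe) != len(water_year_precip):
        raise ValueError("series must have the same length")
    ratio = [swe / p for swe, p in zip(april1_swe, water_year_precip)]
    return ols_slope(water_year_precip), ols_slope(ratio)


if __name__ == "__main__":
    # Synthetic example: flat precipitation (1000 mm every year) while
    # April 1 SWE declines by 2 mm per year from 400 mm.
    precip = [1000.0] * 50
    swe = [400.0 - 2.0 * t for t in range(50)]
    p_trend, ratio_trend = snow_ratio_trends(swe, precip)
    print(f"P trend {p_trend:.4f} mm/yr, SWE/P trend {ratio_trend:.4f} per yr")
```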

Link o’ chain, meet link o’ chain.

Naturally, people are interested in what effect these corrections will have on the analysis of the Steig et al. paper. But before we get to that, we can think about some '

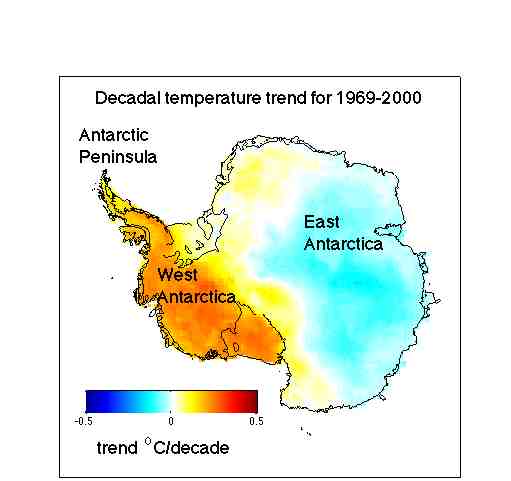

The trends in the AWS reconstruction in the paper are shown above. This is for the full period 1957-2006, and the dots are scaled a little smaller than they were in the paper for clarity. The biggest dot (on the Peninsula) represents about 0.5ºC/decade. The difference you get if you use detrended data is shown next.

Now that we know that the trend (and much of the data) at Harry was in fact erroneous, it's useful to see what happens when you don't use Harry at all. The differences with the original results (at each of the other points) are almost undetectable. (Same scale as immediately above; if the scale in the first figure were used, you couldn't see the dots at all!).

In terms of mass balance, two charts show the mean annual balance of the WGMS reporting glaciers and the mean cumulative balance both of reporting glaciers with more than 30 years of record and of all reporting glaciers. The trends demonstrate why alpine glaciers are currently retreating: mass balances have been significantly and consistently negative. Mass balance is reported as changes in water-equivalent thickness. A loss of 0.9 m of water equivalent is the same as the loss of 1.0 m of glacier thickness, since ice is less dense than water. The cumulative loss of the last 30 years is the equivalent of cutting a thick slice off the average glacier. The trend is remarkably consistent from region to region. The figure on the right is the annual glacier mass balance index from the WGMS (if this were a business, it would be bankrupt by now). The cumulative mass balance index, based on the 30 glaciers with 30 years of record and on all glaciers, is not appreciably different (the dashed line for the subset of 30 reference glaciers reflects the fact that not all 30 glaciers have submitted final data for the last few years).
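
Both quantities in this paragraph are simple arithmetic. The Python sketch below converts metres water equivalent (m w.e.) to metres of ice with a density ratio, and builds a cumulative balance as a running sum of annual balances. It assumes an ice density of 900 kg/m³, which is what the 0.9 m w.e. to 1.0 m figure implies (917 kg/m³ is the usual value for pure ice); the function names and example balances are hypothetical.

```python
from itertools import accumulate

RHO_WATER = 1000.0  # density of water, kg/m^3
RHO_ICE = 900.0     # assumed ice density, kg/m^3 (matches the 0.9 ratio in the text)


def water_equivalent_to_ice(thickness_we_m, rho_ice=RHO_ICE):
    """Convert a thickness change in metres water equivalent (m w.e.) to metres of ice.
    The same mass occupies more volume as ice because ice is less dense than water."""
    return thickness_we_m * RHO_WATER / rho_ice


def cumulative_balance(annual_balances_we_m):
    """Running total of annual mass balances (m w.e.), as in a cumulative-balance chart."""
    return list(accumulate(annual_balances_we_m))


if __name__ == "__main__":
    print(water_equivalent_to_ice(0.9))  # 1.0 m of ice
    # Hypothetical annual balances (m w.e.), for illustration only.
    print(cumulative_balance([-0.5, -0.3, -0.7]))
```

Because each year's negative balance adds to the running total, even modest annual losses produce the steadily falling cumulative curve described above.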

The second parameter reported by WGMS is terminus behavior. The values are generally for glaciers examined annually (many additional glaciers are examined periodically). The population over-represents glaciers from the European Alps, but the overall global and regional records are very similar, with the exception of New Zealand. The numbers of advancing versus retreating glaciers in the diagram below from the WGMS show a 2005 minimum in the percentage of advancing glaciers in Europe, Asia, and North America. In

The British tabloid Daily Mirror recently headlined that “Sea will rise 'to levels of last Ice Age'”. No doubt many of our readers will appreciate just how scary this prospect is: sea level during the last Ice Age was up to 120 meters lower than today. Our favourite swimming beaches – be it Coogee in Sydney or the Darß on the German Baltic coast – would then all be high and dry, and ports like Rotterdam or Tokyo would be far from the sea. Imagine it.

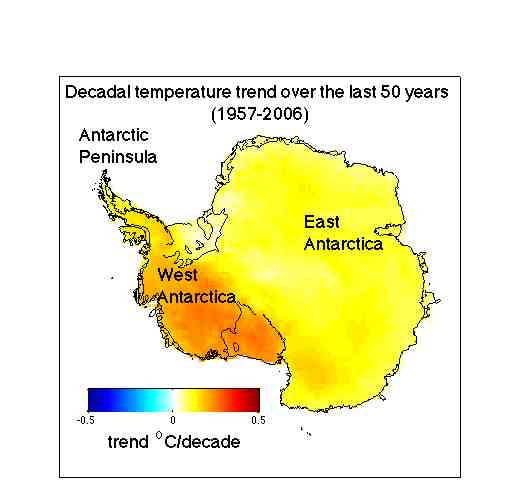

The paper shows that Antarctica has been warming for the last 50 years, and that it has been warming especially in West Antarctica (see the figure). The results are based on a statistical blending of satellite data and temperature data from weather stations. The results don't depend on the statistics alone. They are backed up by independent data from automatic weather stations, as shown in our paper as well as in updated work by Bromwich, Monaghan and others (see their AGU abstract,
