Since 2015 I have been working on a GPU lightmapper called Bakery. It’s finally done, and you can even buy it on Unity’s Asset Store (it can be used outside of Unity as well, but the demand is higher there). It was originally intended for my own game, but I decided to make it a product in its own right and hopefully help other people bake nice lighting. In my old tweet I promised to write about how it works, as there were many, MANY unexpected problems along the way, and I think such a write-up would be useful. I also thought of open-sourcing it at the time, but having spent more than a year of full-time work coding it, I think it’s fair to delay that a bit.

The major focus of this project was to minimize the kinds of artifacts lightmapping often produces, like seams and leaks, while also making it flexible and fast. This blog post won’t cover lighting computation much; instead it will focus on what it takes to produce a high-quality lightmap. We will start with the picture on the left and make it look like the one on the right:

Contents:

- UV space rasterization

- Optimizing UV GBuffer: shadow leaks

- Optimizing UV GBuffer: shadow terminator

- Ray bias

- Fixing UV seams

- Final touches

- Bonus: mip-mapping lightmaps

UV space rasterization

Bakery is in fact the 4th lightmapper I have designed. Somehow I’m obsessed with baking stuff. The first one simply rasterized forward lights in UV space. The 2nd generated UV surface positions and normals and then rendered the scene from every texel to get GI (huge batches with instancing). The 3rd was PlayCanvas’ runtime lightmapper, which is actually very similar to the 1st. All of them had one thing in common: something had to be rasterized in UV space.

Let’s say you have a simple lighting shader and a mesh with lightmap UVs:

You want to bake this lighting. How do you do that? Instead of outputting the transformed vertex position

```hlsl
OUT.Position = mul(IN.Position, MVP);
```

you just output UVs straight on the screen:

```hlsl
OUT.Position = float4(IN.LightmapUV * 2 - 1, 0, 1);
```

Note that “*2-1” is necessary to transform from the typical [0, 1] UV space into the typical [-1, 1] clip space.

Voila:

That was easy. Now let’s try to apply this texture:

Oh no, black seams everywhere!

Because of bilinear interpolation and typical non-conservative rasterization, our texels now blend into the background color. If you have ever worked with any baking software, you know about padding: expanding, or dilating, pixels outward to cover the background. So let’s try to process our lightmap with a very simple dilation shader. Here is one from PlayCanvas, in GLSL:

```glsl
varying vec2 vUv0;
uniform sampler2D source;
uniform vec2 pixelOffset;

void main(void) {
    vec4 c = texture2D(source, vUv0);
    c = c.a>0.0? c : texture2D(source, vUv0 - pixelOffset);
    c = c.a>0.0? c : texture2D(source, vUv0 + vec2(0, -pixelOffset.y));
    c = c.a>0.0? c : texture2D(source, vUv0 + vec2(pixelOffset.x, -pixelOffset.y));
    c = c.a>0.0? c : texture2D(source, vUv0 + vec2(-pixelOffset.x, 0));
    c = c.a>0.0? c : texture2D(source, vUv0 + vec2(pixelOffset.x, 0));
    c = c.a>0.0? c : texture2D(source, vUv0 + vec2(-pixelOffset.x, pixelOffset.y));
    c = c.a>0.0? c : texture2D(source, vUv0 + vec2(0, pixelOffset.y));
    c = c.a>0.0? c : texture2D(source, vUv0 + pixelOffset);
    gl_FragColor = c;
}
```

For every empty background pixel, it simply looks at the 8 neighbours around it and copies the first non-empty value it finds. And the result:

There are still many imperfections, but it’s much better.
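The GLSL dilation shader above can be mirrored on the CPU, for testing or for tools code. The following C++ sketch is an illustration, not PlayCanvas or Bakery code. `dilateOnce`, the `RGBA` float layout and the choice to skip neighbours outside the image are assumptions. Like the shader, it reads from one buffer, writes to another, and probes the neighbours in the same order.

```cpp
#include <array>
#include <cassert>
#include <vector>

using RGBA = std::array<float, 4>;  // alpha > 0 marks a covered texel

// One dilation pass: every empty texel copies its first non-empty neighbour.
std::vector<RGBA> dilateOnce(const std::vector<RGBA>& src, int width, int height) {
    // Same probe order as the shader above.
    static const int kOffsets[8][2] = {{-1, -1}, {0, -1}, {1, -1}, {-1, 0},
                                       {1, 0},   {-1, 1}, {0, 1},  {1, 1}};
    std::vector<RGBA> dst = src;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            if (src[y * width + x][3] > 0.0f) continue;  // covered texel: keep as is
            for (const auto& o : kOffsets) {
                const int nx = x + o[0], ny = y + o[1];
                // Skip neighbours outside the image (a GPU sampler would clamp or wrap instead).
                if (nx < 0 || ny < 0 || nx >= width || ny >= height) continue;
                const RGBA& n = src[ny * width + nx];
                if (n[3] > 0.0f) {  // first non-empty neighbour wins
                    dst[y * width + x] = n;
                    break;
                }
            }
        }
    }
    return dst;
}
```

Each call grows the covered area by one texel, so N calls produce N texels of padding.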

To generate more sophisticated lighting, with area shadows, sky occlusion, colored light bounces, etc., we’ll have to trace some rays. You can write a completely custom ray-tracing implementation using bare DirectX/WebGL/Vulkan/whatever, but there are already very efficient APIs for that, such as OptiX, RadeonRays and DXR. They have a lot in common, so knowing one should give you an idea of how to operate the others: you define surfaces, build an acceleration structure and intersect rays against it. Note that none of these APIs generate lighting. They only give you a very flexible way to do fast ray-primitive intersection on the GPU, and there are potentially lots of different ways to (ab)use it. OptiX was the first of its kind, and that is why I chose it for Bakery, as there were no alternatives back in the day. Today it’s also unique in having an integrated denoiser. DXR can be used on both Nvidia and AMD (not sure about Intel), but it requires Win10. I don’t know much about RadeonRays, but it seems to be the most cross-platform one. Anyway, in this article I’m writing from the OptiX/CUDA (ray-tracing) and DX11 (tools) perspective.

To trace rays from the surface, we first need to acquire a list of sample points on it along with their normals. There are multiple ways to do that. For example, a recent OptiX tutorial suggests randomly distributing points over triangles and then resampling the result to vertices (or possibly lightmap texels). I went with a more direct approach: rendering what I call a UV GBuffer.

It is exactly what it sounds like – just a bunch of textures of the same size with rasterized surface attributes, most importantly position and normal:

Example of a UV GBuffer for a sphere. Left: position, center: normal, right: albedo.

Having rasterized positions and normals allows us to run a ray generation program over the texture dimensions, with every thread spawning a ray (or multiple rays, or zero rays) at its texel. The GBuffer position can be used as the ray’s starting point, and the normal will affect its orientation.

“Ray generation program” is a term used in both OptiX and DXR – think of it as a compute shader with additional ray-tracing capabilities. They can create rays, trace them by executing and waiting for intersection/hit programs (also new shader types) and then obtain the result.

Full Bakery UV GBuffer consists of 6 textures: position, “smooth position”, normal, face normal, albedo and emissive. Alpha channels also contain interesting things like world-space texel size and triangle ID. I will cover them in more detail later.

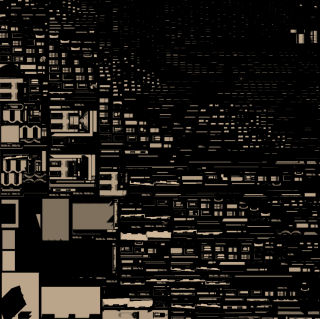

Calculating lighting for every GBuffer texel and dilating the result looks horrible, and there are many artifacts:

What have we done? Why is it so bad? Shadows leak, tiny details look like garbage, and smooth surfaces are lit like they are flat-shaded. I’ll go over each of these problems one by one.

Simply rasterizing the UV GBuffer is not enough. Even dilating it is not enough. UV layouts are often imperfect and can contain very small triangles that will simply be skipped or rendered with noticeable aliasing. If a triangle was too small to be drawn and you dilate nearby texels over its supposed place, you will get artifacts. This is what happens on the vertical bar of the window frame here.

Instead of post-dilation, we need to use or emulate conservative rasterization. Currently, not all GPUs support real conservative raster (hey, I’m not sponsored by Nvidia, just saying), but there are multiple ways to achieve similar results without it:

- Repurposing MSAA samples

- Rendering geometry multiple times with sub-pixel offset

- Rendering lines over triangles

Repurposing MSAA samples is a fun idea. Just render the UV layout with, say, 8x MSAA, then resolve it without any blur, either by picking any sample or by somehow averaging them. It should give you more “conservative” results, but there is a problem. Unfortunately I don’t have working code for this implementation anymore, but I remember it was pretty bad. Recall the pattern of 8x MSAA:

The samples are scattered around and none of them is in the center, yet we use them to calculate lightmap texel centers. This mismatch produces even more shadow leaking.

Rendering lines is something I thought of too late, but it might work pretty well.

So in the end I decided to do multipass rendering with different sub-pixel offsets. To avoid the aforementioned MSAA problems, we can have a centered sample with zero offset, always rendered last on top of everything else (or you can use the depth/stencil buffer and render it first instead… but I was too lazy to do so, and it’s not perf-critical code). These are the offsets I use (to be multiplied by half-texel size):

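```cpp
float uvOffset[5 * 5 * 2] =
{
    // outer ring: 2 half-texels (= 1 texel) away from the center
    -2, -2,    2, -2,   -2,  2,    2,  2,
    -1, -2,    1, -2,   -2, -1,    2, -1,
    -2,  1,    2,  1,   -1,  2,    1,  2,
    -2,  0,    2,  0,    0, -2,    0,  2,
    // inner ring: 1 half-texel away
    -1, -1,    1, -1,   -1,  0,    1,  0,
    -1,  1,    1,  1,    0, -1,    0,  1,
    // centered sample, drawn last on top of everything else
     0,  0
};
```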

Note how larger offsets are used first, then overdrawn by smaller offsets, and finally by the unmodified UV GBuffer. This dilates the buffer, reconstructs tiny features and preserves sample centers in the majority of cases. Like MSAA, this implementation is still limited by the number of samples used, but in practice I found it handles most real-life cases pretty well.

Here you can see a difference it makes. Artifacts present on thin geometry disappear:

Left: simple rasterization. Right: multi-tap rasterization.

Optimizing UV GBuffer: shadow leaks

To fix remaining artifacts, we will need to tweak GBuffer data. Let’s start with shadow leaks.

Shadow leaks occur because texels are large. We calculate lighting for a single point, but it gets interpolated over a much larger area. Once again, there are multiple ways to fix it. A popular approach is to supersample the lightmap, calculating multiple lighting values inside one texel area and then averaging. It is however quite slow and doesn’t completely solve the problem, only being able to lighten wrong shadows a bit.

To fix it I instead decided to push sample points out of shadowed areas where leaks can occur. Such spots can be detected and corrected using a simple algorithm:

- Trace at least 4 tangential rays pointing in different directions from the texel center. Ray length = world-space texel size * 0.5.

- If a ray hits a backface, this texel will leak.

- Push the texel center outside using both the hit face normal and the ray direction.

This method will only fail when you have huge texels and thin double-sided walls, but this is rarely the case. Here is an illustration of how it works:

Here is a loop of 4 rays. The first ray that hits a backface (red dot) determines the new sample position (blue dot) with the following formula:

```hlsl
newPos = oldPos + rayDir * hitDistance + hitFaceNormal * bias;
```

Note that in this case 2 rays hit the backface, so the new sample position could change depending on the order of ray hits, but in practice it doesn’t matter. Bias is an interesting topic in itself, and I will cover it later.

I calculate tangential ray directions using a simple cross product with the normal (the face normal, not the interpolated one), which is not completely correct. Ideally you’d want to use the actual surface tangent/binormal based on the lightmap UV direction, but that would require storing even more data in the GBuffer. Rays that are not aligned to the UV direction can produce some undershoots and overshoots:

In the end I simply chose to stay with a small overshoot.

Why should we even care about ray distance, what happens if it’s unlimited? Consider this case:

Only the 2 shortest rays will properly push the sample out, giving it a color similar to its surroundings. Attempting to push it behind more distant faces will leave the texel incorrectly shadowed.
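The leak-fixing steps above can be sketched in C++. This is an illustration under stated assumptions, not Bakery’s implementation. The `TraceFn` callback is a hypothetical stand-in for a real ray tracer (OptiX, DXR, etc.). The helper axis used to build the cross-product tangent frame is also a hypothetical choice. A hit counts as a backface when the ray direction points the same way as the hit face’s normal.

```cpp
#include <cassert>
#include <cmath>
#include <functional>

struct Vec3 { float x, y, z; };
static Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
static Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
static Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
static Vec3 normalize(Vec3 a) { return a * (1.0f / std::sqrt(dot(a, a))); }

// Closest hit reported by a (hypothetical) tracer along origin + dir * t, t in (0, maxDistance].
struct Hit { bool hit; float distance; Vec3 faceNormal; };
using TraceFn = std::function<Hit(Vec3 origin, Vec3 dir, float maxDistance)>;

// Moves a GBuffer sample out of a closed surface if one of 4 short tangential rays
// hits a backface; otherwise returns it unchanged.
Vec3 fixLeakingSample(Vec3 pos, Vec3 faceNormal, float texelSize, float bias, const TraceFn& trace) {
    // Tangent frame from cross products with the face normal (not aligned to lightmap UVs).
    const Vec3 helper = std::fabs(faceNormal.y) < 0.99f ? Vec3{0, 1, 0} : Vec3{1, 0, 0};
    const Vec3 tangent = normalize(cross(faceNormal, helper));
    const Vec3 binormal = cross(faceNormal, tangent);
    const Vec3 dirs[4] = {tangent, tangent * -1.0f, binormal, binormal * -1.0f};
    for (const Vec3& dir : dirs) {
        const Hit h = trace(pos, dir, texelSize * 0.5f);
        // Backface: the ray travels along the hit face's normal, so we are behind that face.
        if (h.hit && dot(dir, h.faceNormal) > 0.0f)
            return pos + dir * h.distance + h.faceNormal * bias;  // first backface hit wins
    }
    return pos;
}
```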

As mentioned, we need world-space texel size in the UV GBuffer to control ray distance. A handy and cheap way to obtain a sufficiently accurate value is:

```hlsl
float3 dUV1 = max(abs(ddx(IN.worldPos)), abs(ddy(IN.worldPos)));
float dPos = max(max(dUV1.x, dUV1.y), dUV1.z);
dPos = dPos * sqrt(2.0); // convert to diagonal (small overshoot)
```

Calling ddx/ddy during UV GBuffer rasterization answers the question “how much does this value change over one lightmap texel, horizontally/vertically?”, and plugging in the world position basically gives us the texel size. I’m simply taking the maximum here, which is not entirely accurate. Ideally you may want 2 separate values for non-square texels, but such texels are not common in lightmaps, as all automatic unwrappers try to avoid heavy distortion.
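To make the snippet’s arithmetic concrete, here is a CPU emulation in C++. On the GPU, ddx/ddy are differences between neighbouring pixels of a 2x2 quad. This sketch assumes you already have the world positions of a texel and of its horizontal and vertical neighbours in the UV GBuffer. `worldTexelSize` is a hypothetical name.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// ddx/ddy emulated as finite differences to the next texel in x and in y.
float worldTexelSize(Vec3 p, Vec3 pNextX, Vec3 pNextY) {
    const Vec3 ddx{pNextX.x - p.x, pNextX.y - p.y, pNextX.z - p.z};
    const Vec3 ddy{pNextY.x - p.x, pNextY.y - p.y, pNextY.z - p.z};
    // dUV1 = max(abs(ddx), abs(ddy)), per component
    const float mx = std::max(std::fabs(ddx.x), std::fabs(ddy.x));
    const float my = std::max(std::fabs(ddx.y), std::fabs(ddy.y));
    const float mz = std::max(std::fabs(ddx.z), std::fabs(ddy.z));
    const float dPos = std::max(std::max(mx, my), mz);  // largest per-axis change
    return dPos * std::sqrt(2.0f);  // convert to diagonal (small overshoot)
}
```

For a floor where one texel spans 0.1 world units along both x and z, this returns about 0.1414. Half of that is the ray length used by the leak-fixing pass.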

Adjusting sample positions is a massive improvement:

And another comparison, before and after:

Optimizing UV GBuffer: shadow terminator

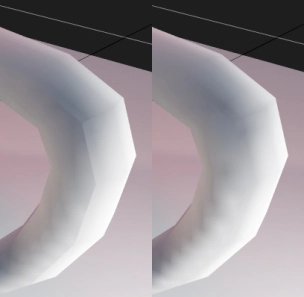

The next thing to address is the wrong self-shadowing of smooth low-poly surfaces. This problem is fairly common in ray-tracing in general and is often referred to as the “shadow terminator problem” (google it). Because rays are traced against the actual geometry, they perceive it as faceted, giving faceted shadows. Combined with typical smooth interpolated normals, this looks wrong. “Smooth normals” is a hack everyone has been using since the dawn of time, and to make ray-tracing practical we have to support it.

There is not much literature on this, but so far these are the methods I have found being used:

- Adding constant bias to ray start (used in many offline renderers)

- Making shadow rays ignore adjacent faces that are almost coplanar (used in 3dsmax in “Advanced Ray-Traced Shadow” mode)

- Tessellating/smoothing geometry before ray-tracing (mentioned somewhere)

- Blurring the result (mentioned somewhere)

- A mysterious getSmoothP function in Houdini (“Returns modified surface position based on a smoothing function”)

Constant bias just sucks, similarly to shadowmapping bias. It’s a balance between wrong self-shadowing and peter-panning:

Shadow ray bias demonstration from 3dsmax documentation

The coplanar idea requires adjacent face data, and it just doesn’t work well. Tweaking the value for one spot breaks the shadow in another:

Blurring the result will mess with desired shadow width and proper lighting gradients, so it’s a bad idea. Tessellating real geometry is fun but slow.

What really got my brain ticking is the Houdini function. What is a smooth position? Can we place samples as if they were on a round object, not a faceted one? Turns out, we can. Meet Phong Tessellation (again):

It is fast, it doesn’t require knowledge of adjacent faces, it makes up a plausible smooth position based on smooth normal. It’s just what we need.

Instead of actually tessellating anything, we can compute the modified position per fragment during GBuffer drawing. A geometry shader can pass all 3 vertex positions/normals, together with barycentric coordinates, down to the pixel shader, where the Phong Tessellation code is executed.

Note that it should only be applied to “convex” triangles, with normals pointing outwards. Triangles with inward-pointing normals don’t exhibit the problem anyway, and we never want sample points to go inside the surface. Build a plane from the face normal and a point on the face, then test the modified position against it. This is a good check that you got the position right, and it lets you at least flatten fragments that go the wrong way.
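The HLSL later in this section calls `projectToTangentPlane`, `triLerp` and `pointOnPlane` without showing their bodies. The C++ sketch below gives plausible, hypothetical definitions for them, plus the core Phong Tessellation step with the plane test. Published Phong Tessellation also has a shape factor that blends back toward the flat position. This sketch uses the full projection, as that HLSL appears to. On failure it simply falls back to the flat position instead of retrying with flattened normals.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
static Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
static Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Hypothetical helper bodies (names taken from the HLSL).
// Projects p onto the tangent plane of a vertex with position v and unit normal n.
static Vec3 projectToTangentPlane(Vec3 p, Vec3 v, Vec3 n) { return p - n * dot(p - v, n); }
// Barycentric interpolation of three values.
static Vec3 triLerp(Vec3 bary, Vec3 a, Vec3 b, Vec3 c) { return a * bary.x + b * bary.y + c * bary.z; }
// Signed distance from point to the plane through planePoint with unit normal planeNormal.
static float pointOnPlane(Vec3 point, Vec3 planeNormal, Vec3 planePoint) {
    return dot(point - planePoint, planeNormal);
}

struct Triangle { Vec3 pos[3]; Vec3 normal[3]; Vec3 faceNormal; };

// Smooth position of one fragment; the flat position if the smooth one sinks below the face.
Vec3 smoothPosition(const Triangle& t, Vec3 bary) {
    const Vec3 flat = triLerp(bary, t.pos[0], t.pos[1], t.pos[2]);
    const Vec3 smooth = triLerp(bary, projectToTangentPlane(flat, t.pos[0], t.normal[0]),
                                projectToTangentPlane(flat, t.pos[1], t.normal[1]),
                                projectToTangentPlane(flat, t.pos[2], t.normal[2]));
    // Only push positions away from the surface, never inside.
    return pointOnPlane(smooth, t.faceNormal, flat) < 0.0f ? flat : smooth;
}
```

On a triangle cut from a unit sphere (vertices and normals at the three axes), the centroid moves from a distance of about 0.577 to about 0.962 from the origin, much closer to the true sphere. A bowl-shaped triangle, whose normals tilt toward its center, keeps its flat positions.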

Simply using rounded “smooth” position gets us here:

Almost nice 🙂 But what is this little seam on the left?

Sometimes there are weird triangles with 2 normals pointing out and one pointing in (or the other way around), which pushes some samples under the face. It’s a shame, because these triangles could produce an almost meaningful extruded position. Instead the samples go inside, and we have to flatten them.

To improve such cases, I transform the normals into the triangle’s local space (with one axis aligned to an edge), flatten them in one direction, transform them back, and check whether the situation improves. There are probably better ways to do that. The code I wrote for it is terribly inefficient and was the result of quick experimentation, but it gets the job done, and we only need to execute it once before any lightmap rendering:

```hlsl
// phong tessellation
float3 projA = projectToTangentPlane(IN.worldPos, IN.worldPosA, IN.NormalA);
float3 projB = projectToTangentPlane(IN.worldPos, IN.worldPosB, IN.NormalB);
float3 projC = projectToTangentPlane(IN.worldPos, IN.worldPosC, IN.NormalC);
float3 smoothPos = triLerp(IN.Barycentric, projA, projB, projC);

// only push positions away, not inside
float planeDist = pointOnPlane(smoothPos, IN.FaceNormal, IN.worldPos);
if (planeDist < 0.0f)
{
    // default smooth triangle is inside - try flattening normals in one dimension

    // AB
    float3 edge = normalize(IN.worldPosA - IN.worldPosB);
    // orthonormal edge frame: x = edge, y = face normal, z = in-plane direction across the edge
    float3x3 edgePlaneMatrix = float3x3(edge, IN.FaceNormal, cross(edge, IN.FaceNormal));
    float3 normalA = mul(edgePlaneMatrix, IN.NormalA); // to edge space
    float3 normalB = mul(edgePlaneMatrix, IN.NormalB);
    float3 normalC = mul(edgePlaneMatrix, IN.NormalC);
    normalA.z = 0; // drop the tilt across the edge
    normalB.z = 0;
    normalC.z = 0;
    normalA = mul(normalA, edgePlaneMatrix); // back to world space (transpose = inverse)
    normalB = mul(normalB, edgePlaneMatrix);
    normalC = mul(normalC, edgePlaneMatrix);
    projA = projectToTangentPlane(IN.worldPos, IN.worldPosA, normalA);
    projB = projectToTangentPlane(IN.worldPos, IN.worldPosB, normalB);
    projC = projectToTangentPlane(IN.worldPos, IN.worldPosC, normalC);
    smoothPos = triLerp(IN.Barycentric, projA, projB, projC);
    planeDist = pointOnPlane(smoothPos, IN.FaceNormal, IN.worldPos);
    if (planeDist < 0.0f)
    {
        // BC
        edge = normalize(IN.worldPosB - IN.worldPosC);
        edgePlaneMatrix = float3x3(edge, IN.FaceNormal, cross(edge, IN.FaceNormal));
        normalA = mul(edgePlaneMatrix, IN.NormalA);
        normalB = mul(edgePlaneMatrix, IN.NormalB);
        normalC = mul(edgePlaneMatrix, IN.NormalC);
        normalA.z = 0;
        normalB.z = 0;
        normalC.z = 0;
        normalA = mul(normalA, edgePlaneMatrix);
        normalB = mul(normalB, edgePlaneMatrix);
        normalC = mul(normalC, edgePlaneMatrix);
        projA = projectToTangentPlane(IN.worldPos, IN.worldPosA, normalA);
        projB = projectToTangentPlane(IN.worldPos, IN.worldPosB, normalB);
        projC = projectToTangentPlane(IN.worldPos, IN.worldPosC, normalC);
        smoothPos = triLerp(IN.Barycentric, projA, projB, projC);
        planeDist = pointOnPlane(smoothPos, IN.FaceNormal, IN.worldPos);
        if (planeDist < 0.0f)
        {
            // CA
            edge = normalize(IN.worldPosC - IN.worldPosA);
            edgePlaneMatrix = float3x3(edge, IN.FaceNormal, cross(edge, IN.FaceNormal));
            normalA = mul(edgePlaneMatrix, IN.NormalA);
            normalB = mul(edgePlaneMatrix, IN.NormalB);
            normalC = mul(edgePlaneMatrix, IN.NormalC);
            normalA.z = 0;
            normalB.z = 0;
            normalC.z = 0;
            normalA = mul(normalA, edgePlaneMatrix);
            normalB = mul(normalB, edgePlaneMatrix);
            normalC = mul(normalC, edgePlaneMatrix);
            projA = projectToTangentPlane(IN.worldPos, IN.worldPosA, normalA);
            projB = projectToTangentPlane(IN.worldPos, IN.worldPosB, normalB);
            projC = projectToTangentPlane(IN.worldPos, IN.worldPosC, normalC);
            smoothPos = triLerp(IN.Barycentric, projA, projB, projC);
            planeDist = pointOnPlane(smoothPos, IN.FaceNormal, IN.worldPos);
            if (planeDist < 0.0f)
            {
                // Flat
                smoothPos = IN.worldPos;
            }
        }
    }
}
```

Most of these matrix multiplies could be replaced by something cheaper, but anyway, here’s the result:

The seam is gone. The shadow still has a somewhat weird shape, but it looks exactly the same with classic shadowmapping, so I call it a day.

However, there is another problem. An obvious consequence of moving sample positions too far from the surface is that they now can go inside another surface!

Meshes don’t intersect, but sample points of one object penetrate into another

Turns out, smooth position alone is not enough; it can’t entirely solve this problem. So on top of that I execute the following algorithm:

- Trace a ray from flat position to smooth position

- If there is an obstacle, use flat, otherwise smooth

In practice it will give us weird per-texel discontinuities when the same triangle is partially smooth and flat. We can improve the algorithm further and also cut the amount of rays traced:

- Create an array with 1 bit per triangle (or 1 byte, to make things easier).

- For every texel:

  - If the triangle bit is 0, trace one ray from the real (flat) position to the smooth position.

  - If there is an obstacle, set the triangle bit to 1.

  - position = triangleBitSet ? flatPos : smoothPos

That means you also need triangle IDs in the GBuffer, and I output one into alpha of the smooth position texture using SV_PrimitiveID.
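The per-triangle selection can be sketched in C++. `SegmentBlockedFn` is a hypothetical stand-in for the flat-to-smooth visibility ray. Unlike the list above, this sketch picks the final positions in a separate second pass, so texels visited before their triangle got marked still end up flat. That ordering is an assumption of the sketch.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <vector>

struct Vec3 { float x, y, z; };

struct GBufferTexel {
    Vec3 flatPos;
    Vec3 smoothPos;
    int triangleId;  // e.g. from SV_PrimitiveID; -1 for empty texels
};

// Hypothetical visibility query: true if the segment a -> b hits any geometry.
using SegmentBlockedFn = std::function<bool(Vec3 a, Vec3 b)>;

std::vector<Vec3> selectPositions(const std::vector<GBufferTexel>& texels, int triangleCount,
                                  const SegmentBlockedFn& blocked) {
    std::vector<uint8_t> useFlat(triangleCount, 0);  // 1 byte per triangle
    // Pass 1: mark triangles whose smooth surface pokes through other geometry.
    for (const GBufferTexel& t : texels) {
        if (t.triangleId < 0 || useFlat[t.triangleId]) continue;  // already marked: no ray needed
        if (blocked(t.flatPos, t.smoothPos)) useFlat[t.triangleId] = 1;
    }
    // Pass 2: every texel of a marked triangle goes flat, avoiding per-texel discontinuities.
    std::vector<Vec3> out;
    out.reserve(texels.size());
    for (const GBufferTexel& t : texels)
        out.push_back(t.triangleId >= 0 && useFlat[t.triangleId] ? t.flatPos : t.smoothPos);
    return out;
}
```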

To test the approach, I used the Alyx Vance model. It’s an extremely hard case for ray-tracing due to a heavy mismatch between the low-poly geometry and the interpolated normals. Here are the triangles marked by the algorithm above:

Note how there are especially many triangles marked in the area of belt/body intersection, where both surfaces are smooth-shaded. And the result:

Left: artifacts using smooth position. Right: fixed by using flat position in marked areas.

Final look:

I consider it a success. There are still a couple of weird-looking spots where the normals are just way too off, but I don’t believe it can be improved further without breaking self-shadowing, so this is where I stopped.

I expect more attention to this problem in the future, as real-time ray-tracing is getting bigger, and games can’t just apply real tessellation to everything like in offline rendering.

Ray bias

Previously I mentioned a “bias” value in the context of a tiny polygon offset, also referred to as epsilon. Such an offset is often needed in ray-tracing. In fact, every time you cast a ray from a surface, you usually have to offset the origin a tiny bit to prevent noisy self-intersection due to floating-point inaccuracy. Quite often (e.g. in OptiX samples or small demos) this value is hard-coded to something like 0.0001. But because floats are floats, the further an object gets from the world origin, the less precision its coordinates have. At some point a constant bias will start to jitter and break. You can fix it by simply increasing the value to 0.01 or so, but the more you increase it, the less accurate all rays get everywhere. We can’t fix the problem completely, but we can get an adaptive bias that is always “just enough”.

At first I thought my GPU was fried

The image above shows the first time the lightmapper was tested on a relatively large map. It was fine near the world origin, but the further you moved, the worse it got. After I realized why it happened, I spent a considerable amount of time researching, reading papers, testing solutions, and even thinking of porting nextafterf() to CUDA.

But then my genius friend Boris comes in and says:

```hlsl
position += position * 0.0000002;
```

Wait, is that it? Turns out… yes, it works surprisingly well. In fact, 0.0000002 is FLT_EPSILON (about 1.19e-7) rounded up. With FLT_EPSILON itself, the result is sometimes exactly what nextafterf() gives and sometimes slightly larger, so it looks like a fairly good and cheap approximation. The rounded-up value was chosen because of better precision reported on some GPUs.
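Here is why this works. Floats in [2^k, 2^(k+1)) are spaced 2^(k-23) apart, which lies between FLT_EPSILON·|x|/2 and FLT_EPSILON·|x|. So adding |x|·FLT_EPSILON always moves x by at least one representable step: sometimes exactly nextafterf(), sometimes two steps. With 0.0000002 ≈ 1.68·FLT_EPSILON, the move should be 2 or 3 steps on IEEE-conforming hardware. The C++ sketch below checks this on the CPU with `std::nextafter`. `biasAwayFromZero` and `floatSteps` are hypothetical helper names.

```cpp
#include <cassert>
#include <cfloat>
#include <cmath>

// Boris's trick: push a coordinate away from zero by a few float steps,
// scaled to the coordinate's own magnitude.
inline float biasAwayFromZero(float x, float eps = 0.0000002f) { return x + x * eps; }

// Number of representable floats walked from a up to b (requires a <= b).
inline int floatSteps(float a, float b) {
    int steps = 0;
    while (a < b) {
        a = std::nextafter(a, b);
        ++steps;
    }
    return steps;
}
```

For example, `biasAwayFromZero(1.0f)` lands exactly two steps above 1.0, while `1.0f + 1.0f * FLT_EPSILON` equals `std::nextafter(1.0f, 2.0f)`.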

In case we need to add a small bias in the desired direction, this trick can be expanded into:

```hlsl
position += sign(normal) * abs(position * 0.0000002);
```
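A per-component C++ version of the directional variant, for reference. `offsetAlongNormal` and `signf` are hypothetical names. `signf` mimics HLSL `sign()`, which returns 0 for a zero component, so the position is left untouched along any axis where the normal has no component.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// HLSL-style sign(): -1, 0 or +1.
static float signf(float v) { return static_cast<float>((v > 0.0f) - (v < 0.0f)); }

// position += sign(normal) * abs(position * 0.0000002), per component.
Vec3 offsetAlongNormal(Vec3 p, Vec3 n, float eps = 0.0000002f) {
    return {p.x + signf(n.x) * std::fabs(p.x * eps),
            p.y + signf(n.y) * std::fabs(p.y * eps),
            p.z + signf(n.z) * std::fabs(p.z * eps)};
}
```

Note that a coordinate that is exactly 0 gets no offset, since the bias scales with the coordinate’s magnitude.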

Fixing UV seams

UV seams are a known problem, and a widely accepted solution was published earlier in “Lighting Technology of The Last Of Us”. The proposed idea was to use least squares to make texels on different sides of the seam match. But it’s slow, it’s hard to run on the GPU, and also I’m bad at maths. In general, making colors match felt like a simple enough problem to solve without such complicated methods. What I went for was:

- [CPU] Find seams and create a line vertex buffer with them. Set line UVs to those from another side of the seam.

- [GPU] Draw lines on top of the lightmap. Do it multiple times with alpha blending, until both sides match.

To find seams:

- Collect all edges. An edge is a pair of vertex indices. Sort the indices within each edge so their order is always the same (if there are AB and BA, they both become AB), as it will speed up comparisons.

- Put the first vertices of the edges into some kind of acceleration structure to perform quick neighbour searches. Brute-force search can be terribly slow. I rolled my own poor man’s Sweep and Prune by only sorting along one axis, but even that gave a significant performance boost.

- Take an edge and test its first vertex against the first vertices of its neighbours:

  - Position difference < epsilon

  - Normal difference < epsilon

  - UV difference > epsilon

- If so, perform the same tests on the second vertices.

- Now also check if the edge UVs share a line segment.

- If they don’t, this is clearly a seam.

A naive approach would be to just compare edge vertices directly, but because I don’t trust floats, and geometry can be imperfect, difference tests are more reliable. Checking for a shared line segment is an interesting one, and it wasn’t initially obvious. It covers cases like 2 adjacent rectangles in the UV layout whose vertices don’t meet.
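The seam search can be sketched in C++. This is a simplified illustration, not Bakery’s code. `findSeams`, the structs and `eps` are assumptions. The sweep sorts edges by the smaller x of their two vertices, and each candidate pair is tested in both vertex orders rather than only first-against-first. The shared-segment check treats two UV edges as sharing a segment if they are collinear within `eps` and overlap by more than `eps`.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <utility>
#include <vector>

struct Vec2 { float x, y; };
struct Vec3 { float x, y, z; };
struct Vertex { Vec3 pos, normal; Vec2 uv; };
struct Edge { int a, b; };  // pair of vertex indices

static float dist(Vec3 p, Vec3 q) {
    return std::sqrt((p.x - q.x) * (p.x - q.x) + (p.y - q.y) * (p.y - q.y) + (p.z - q.z) * (p.z - q.z));
}
static float dist(Vec2 p, Vec2 q) { return std::hypot(p.x - q.x, p.y - q.y); }

// Same position and normal but a different UV: the vertices sit on the two sides of a seam.
static bool seamVertices(const Vertex& v, const Vertex& w, float eps) {
    return dist(v.pos, w.pos) < eps && dist(v.normal, w.normal) < eps && dist(v.uv, w.uv) > eps;
}

// True if UV segment q0-q1 lies on the line through p0-p1 and overlaps p0-p1.
static bool uvSegmentsOverlap(Vec2 p0, Vec2 p1, Vec2 q0, Vec2 q1, float eps) {
    const Vec2 d{p1.x - p0.x, p1.y - p0.y};
    const float len = std::hypot(d.x, d.y);
    if (len < eps) return false;
    auto across = [&](Vec2 q) { return (d.x * (q.y - p0.y) - d.y * (q.x - p0.x)) / len; };
    auto along = [&](Vec2 q) { return (d.x * (q.x - p0.x) + d.y * (q.y - p0.y)) / len; };
    if (std::fabs(across(q0)) > eps || std::fabs(across(q1)) > eps) return false;
    const float lo = std::min(along(q0), along(q1)), hi = std::max(along(q0), along(q1));
    return std::min(hi, len) - std::max(lo, 0.0f) > eps;
}

// Returns pairs of indices into `edges` that form a UV seam.
std::vector<std::pair<int, int>> findSeams(const std::vector<Vertex>& v, const std::vector<Edge>& edges,
                                           float eps = 1e-5f) {
    // Poor man's sweep and prune: sort along x only, compare edges starting within eps.
    auto minX = [&](const Edge& e) { return std::min(v[e.a].pos.x, v[e.b].pos.x); };
    std::vector<int> order(edges.size());
    for (size_t i = 0; i < order.size(); ++i) order[i] = static_cast<int>(i);
    std::sort(order.begin(), order.end(), [&](int i, int j) { return minX(edges[i]) < minX(edges[j]); });

    std::vector<std::pair<int, int>> seams;
    for (size_t i = 0; i < order.size(); ++i) {
        const Edge& e = edges[order[i]];
        for (size_t j = i + 1; j < order.size() && minX(edges[order[j]]) - minX(e) <= eps; ++j) {
            const Edge& f = edges[order[j]];
            // Index order says nothing about geometric order, so try both vertex pairings.
            const bool same = seamVertices(v[e.a], v[f.a], eps) && seamVertices(v[e.b], v[f.b], eps);
            const bool flipped = seamVertices(v[e.a], v[f.b], eps) && seamVertices(v[e.b], v[f.a], eps);
            if (!same && !flipped) continue;
            const Vec2 q0 = same ? v[f.a].uv : v[f.b].uv, q1 = same ? v[f.b].uv : v[f.a].uv;
            if (!uvSegmentsOverlap(v[e.a].uv, v[e.b].uv, q0, q1, eps)) seams.emplace_back(order[i], order[j]);
        }
    }
    return seams;
}
```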

After the seams are found, and the line buffer is created, you can just ping-pong 2 render targets, leaking some opposite side color with every pass. I’m also using the same “conservative” trick as mentioned in the first chapter.

Because mip-mapping can’t be used when reading the lightmap (to avoid picking up wrong colors), a problem can arise if 2 edges from the same seam have vastly different sizes. In this case we’ll have to rely on the nearest neighbour texture fetch potentially skipping some texels, but in practice I never noticed it being an issue.

Results:

Even given some imperfections, I think the quality is quite good for most cases, and the method is simple to understand and implement compared to least squares. Also, we only process pixels on the GPU, where they belong.

Final touches

- Denoising. A good denoiser can save a load of time. Since I was OK with an Nvidia-only solution, I was lucky to be able to use the OptiX AI denoiser, and it’s incredible. My own knowledge of denoising is limited to bilateral blur, but this is just next level. It makes it possible to render with a modest number of samples and fix the result up. Lightmaps are also a better candidate for machine-learned denoising than final frames, as we don’t have to worry about messing up texture detail and unrelated effects, only lighting.

  A few notes:

  - Denoising must happen before UV seam fixing.

  - Previously the OptiX denoiser was only trained on non-HDR data (although input/output is float3). I’ve heard that’s no longer the case, but even with this limitation it’s still very usable. The trick is to use a reversible tonemapping operator; here is a great article by Timothy Lottes (tonemap -> denoise -> inverseTonemap).

- Bicubic interpolation. If you are not shipping on mobile, there are exactly 0 reasons not to use bicubic interpolation for lightmaps. Many UE3 games did that in the past, and it is a great trick. But some engines (Unity, I’m looking at you) still think they can get away with a single bilinear tap in 2018. Bicubic hides low resolution and makes jagged lines appear smooth. Sometimes I see people fixing jagged sharp shadows by super-sampling during the bake, but that feels like a waste of lightmapping time to me.

Left: bilinear. Right: bicubic.

Bakery comes with a shader patch enabling bicubic for all shaders. There are many known implementations; here is one in CUDA (tex2DFastBicubic), for example. You’ll need 4 taps and a pinch of maths.
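The idea behind tex2DFastBicubic, as described in GPU Gems 2 (“Fast Third-Order Texture Filtering”), is that a cubic B-spline over 4 texels per axis can be rebuilt from 2 linear taps per axis. The tap positions are chosen so the texture hardware does part of the weighting. In 2D that is 2×2 = 4 bilinear taps instead of 16 fetches. The 1D C++ sketch below computes the weights and tap offsets and compares the result against the direct 4-fetch sum. The function names are hypothetical. Note that a B-spline smooths the texel values rather than passing through them exactly.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Cubic B-spline weights for the fractional position a in [0, 1); they sum to 1.
static void bsplineWeights(float a, float w[4]) {
    const float a2 = a * a, a3 = a2 * a;
    w[0] = (-a3 + 3.0f * a2 - 3.0f * a + 1.0f) / 6.0f;
    w[1] = (3.0f * a3 - 6.0f * a2 + 4.0f) / 6.0f;
    w[2] = (-3.0f * a3 + 3.0f * a2 + 3.0f * a + 1.0f) / 6.0f;
    w[3] = a3 / 6.0f;
}

// Clamp-to-edge fetch; texel i sits at coordinate i.
static float fetch(const std::vector<float>& s, int i) {
    const int last = static_cast<int>(s.size()) - 1;
    return s[std::min(std::max(i, 0), last)];
}

// One hardware-style linear tap (the 1D slice of a bilinear fetch).
static float linearTap(const std::vector<float>& s, float x) {
    const float fl = std::floor(x);
    const int i = static_cast<int>(fl);
    const float t = x - fl;
    return fetch(s, i) * (1.0f - t) + fetch(s, i + 1) * t;
}

// Reference: 4 explicit fetches per axis (16 in 2D).
float cubicDirect(const std::vector<float>& s, float x) {
    const float fl = std::floor(x);
    const int i = static_cast<int>(fl);
    float w[4];
    bsplineWeights(x - fl, w);
    return w[0] * fetch(s, i - 1) + w[1] * fetch(s, i) + w[2] * fetch(s, i + 1) + w[3] * fetch(s, i + 2);
}

// Same filter from 2 linear taps per axis (2 x 2 = 4 bilinear taps in 2D).
float cubicFast(const std::vector<float>& s, float x) {
    const float fl = std::floor(x);
    float w[4];
    bsplineWeights(x - fl, w);
    const float g0 = w[0] + w[1], g1 = w[2] + w[3];
    const float h0 = -1.0f + w[1] / g0;  // between texels i-1 and i, split w0 : w1
    const float h1 = 1.0f + w[3] / g1;   // between texels i+1 and i+2, split w2 : w3
    return g0 * linearTap(s, fl + h0) + g1 * linearTap(s, fl + h1);
}
```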

Wrapping it up the complete algorithm is:

- Draw a UV GBuffer using (pseudo) conservative rasterization. It should at least have:

- Flat position

- Smooth position obtained by Phong Tessellation adapted to only produce convex surfaces

- Normal

- World-space texel size

- Triangle ID

- Select smooth or flat position per-triangle.

- Push positions outside of closed surfaces.

- Compute lighting for every position. Use adaptive bias.

- Dilate

- Denoise

- Fix UV seams

- Use bicubic interpolation to read the lightmap.

Final result:

Nice and clean

Bonus: mip-mapping lightmaps

In general, lightmaps are best left without mip-mapping. Since a well-packed UV layout contains an awful lot of detail sitting close to each other, simply downsampling the texture makes lighting leak over neighbouring UV charts.

But sometimes you may really need mips, as they are useful not only for per-pixel mip-mapping, but also for LODs to save memory. That was my case: the lightmapper itself sometimes has to load all previously rendered scene data, and to avoid running out of VRAM, distant lightmaps must be LODed.

To solve this problem we can generate a special mip-friendly UV layout, or repack an existing one, based on the knowledge of the lowest required resolution. Suppose we want to downsample the lightmap to 128×128:

- Trace 4 rays, like in the leak-fixing pass, but with 1/128 texel size. Set bit for every texel we’d need to push out.

- Find all UV charts.

- Pack them recursively as AABBs, but snap AABB vertices to texel centers of the lowest-resolution mip wanted. In this case we must snap UVs to ceil(uv*128)/128. Never let any AABB be smaller than one lowest-res texel (1/128).

- Render using this layout, or transfer texels from the original layout to this one after rendering.

- Generate mips.

- Use previously traced bitmask to clear marked texels and instead dilate their neighbours inside.

Because of such packing, UV charts will get downsampled individually, but won’t leak over other charts, so it works much better, at least for a nearest-neighbour lookup. The whole point of tracing the bitmask and dilating these spots inside is to minimize shadow leaking in mips, while not affecting the original texture.

It’s not ideal, and it doesn’t work for bilinear/bicubic, but it was enough for my purposes. Unfortunately, to support bilinear/bicubic sampling we would need to add (1/lowestRes) of empty space around all UV charts, which might be too wasteful. Another limitation of this approach is that your UV chart count must not exceed lowestMipWidth * lowestMipHeight.
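The snapping step of the mip-friendly layout can be illustrated with a small C++ sketch. The post packs charts recursively; for brevity, this sketch swaps in a plain shelf packer, so `packForMips`, the structs and the packing order are assumptions. What it does show is that every chart AABB is rounded up to whole texels of the lowest mip (at least 1×1) and placed on that texel grid. This is also why the chart count can’t exceed lowRes × lowRes.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <numeric>
#include <vector>

struct ChartSize { float width, height; };  // chart AABB size in lightmap UV units
struct Placement { int x, y, w, h; };        // position and size in lowest-mip texels

// Returns false if the snapped charts don't fit into lowRes x lowRes.
bool packForMips(const std::vector<ChartSize>& charts, int lowRes, std::vector<Placement>& out) {
    out.assign(charts.size(), Placement{0, 0, 0, 0});
    for (size_t i = 0; i < charts.size(); ++i) {  // snap sizes up, never below one texel
        out[i].w = std::max(1, static_cast<int>(std::ceil(charts[i].width * lowRes)));
        out[i].h = std::max(1, static_cast<int>(std::ceil(charts[i].height * lowRes)));
    }
    std::vector<size_t> order(charts.size());
    std::iota(order.begin(), order.end(), size_t{0});
    std::sort(order.begin(), order.end(), [&](size_t a, size_t b) { return out[a].h > out[b].h; });

    int x = 0, y = 0, shelfHeight = 0;
    for (size_t i : order) {
        Placement& p = out[i];
        if (p.w > lowRes) return false;
        if (x + p.w > lowRes) {  // row is full: start a new shelf
            y += shelfHeight;
            x = 0;
            shelfHeight = 0;
        }
        if (y + p.h > lowRes) return false;
        p.x = x;
        p.y = y;
        x += p.w;
        shelfHeight = std::max(shelfHeight, p.h);
    }
    return true;
}
```

A chart’s UV offset in the new layout is then `(x / lowRes, y / lowRes)`, always a whole number of lowest-mip texels, so downsampling never mixes two charts into one texel.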

P.S.

Top ways to annoy me:

- Don’t use gamma correct rendering.

- Ask “will it support real-time GI?”

- Complain about baking being slow for your 100×100 kilometers world.

- Tell me how lightmaps are only needed for mobile, and we’re totally in the high quality real-time GI age now.

- Say “gigarays” one more time.

+1 from a guy who is also making a third-party GPU lightmapper, but for Unreal (see https://forums.unrealengine.com/development-discussion/rendering/1460002-luoshuang-s-gpulightmass). At that time I didn’t do much about artifacts in lightmap space, so compared to yours my lightmapper produces constant horrible artifacts lol. Most of my time went into writing a fast ray tracer and an efficient GI solver (variance-based ray guiding, etc.). OptiX is too slow, much slower than OptiX Prime, but Prime doesn’t support evaluating masks during ray traversal, so in the end I made my own tracer. I’m wondering how to combine the two parts so we can have the best lightmapper in the world lol.

I saw your thread on the Unreal forum, impressive work!

My GI solver is much simpler than yours: every bounce is just reflecting previously baked lighting using a uniform hemisphere of rays. Something that’s only possible in the lightmapping world, as we have nice scene parametrization to cache our lighting.

I had suspected OptiX is not doing the fastest job… but even at its current speed it still beats everything I know. What are your ray traversal secrets? 🙂 Are you using TRBVH or another structure?

Looks like we are using the same ‘radiosity caching’ idea lol: caching in the lightmaps. Before I came to Epic I thought cosine-distributed rays were bad, because strong lighting coming from near the horizon would look very bad. Then I learnt that people never use them alone. They are usually combined with explicit skylight cubemap sampling and ray clamping for firefly rejection. This combination of three is better than the same three with cosine replaced by uniform.

For acceleration structures, TRBVH is known for relatively good traversal perf and fast construction time on the GPU. SBVH is still the best one for speed, and Embree provides a helper API that lets you build an SBVH with any branching factor very quickly. However, while there is a speed difference between TRBVH and SBVH, I believe the improvement is marginal. I did two things. One is sorted tracing: unlike path tracing, since all the materials are diffuse, we can sort the lightmap texels rather than the rays and still get ‘sorted’ performance. The other is that, I believe, my ray tracer is fast simply because it’s simple lol: it traverses and evaluates masks, and doesn’t do proper instancing lol. When I finally add instancing, I believe it should be on par with Prime.

Caching in the lightmaps has one problem: it can run out of VRAM. That’s why I also had to invent some mip-mapping tricks, so I don’t need a crazy amount of memory to bake large worlds. There is an interesting preprocess step I didn’t describe in the article, where I throw some rays from one lightmap to another and check the texel size at the hit to determine which mip level would suffice for GI.

Did Epic hire you because of the lightmapper? 😀

Skylight: I always calculate it separately. In fact, my GI code and all lights are completely isolated and know nothing about each other; it sort of feels like deferred rendering. First all lights are blended additively, then GI bounces everything. Therefore the skylight and GI have independent sample counts, and I usually set more for the sky. To prevent fireflies I just downsample sky cubemaps to 256×256. In all offline renderers I know, preconvolved/low-res HDRIs produce much better results with fewer samples than hi-res ones.

BTW, when I said “uniform hemisphere”, I actually meant cosine-weighted.
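Since the correction above hinges on the difference, here is a minimal Python sketch of cosine-weighted hemisphere sampling using Malley’s method (sample the unit disk uniformly, then project up onto the hemisphere). It is not Bakery’s code: the function names and the `radiance_fn` callback, which stands in for a lookup into the previously baked lighting, are hypothetical. Because the pdf is cos θ/π, the cosine term cancels and the plain average of gathered radiance equals irradiance divided by π.

```python
import math
import random


def orthonormal_basis(n):
    """Return tangent t and bitangent b so that (t, b, n) is orthonormal; n must be unit length."""
    nx, ny, nz = n
    # Pick the world axis least aligned with n to avoid a degenerate cross product.
    a = (1.0, 0.0, 0.0) if abs(nx) < 0.9 else (0.0, 1.0, 0.0)
    # t = normalize(a x n)
    tx = a[1] * nz - a[2] * ny
    ty = a[2] * nx - a[0] * nz
    tz = a[0] * ny - a[1] * nx
    inv = 1.0 / math.sqrt(tx * tx + ty * ty + tz * tz)
    t = (tx * inv, ty * inv, tz * inv)
    # b = n x t (already unit length, since n and t are orthonormal)
    b = (ny * t[2] - nz * t[1], nz * t[0] - nx * t[2], nx * t[1] - ny * t[0])
    return t, b


def cosine_sample_hemisphere(n, u1, u2):
    """Map two uniform numbers in [0,1) to a direction around n with pdf cos(theta)/pi."""
    r = math.sqrt(u1)
    phi = 2.0 * math.pi * u2
    x, y = r * math.cos(phi), r * math.sin(phi)  # uniform point on the unit disk
    z = math.sqrt(max(0.0, 1.0 - u1))            # lift to the hemisphere: z = cos(theta)
    t, b = orthonormal_basis(n)
    return tuple(x * t[i] + y * b[i] + z * n[i] for i in range(3))


def gather_texel(n, radiance_fn, num_rays, rng):
    """Estimate irradiance / pi at a texel with normal n: with a cosine pdf it is just mean radiance."""
    total = 0.0
    for _ in range(num_rays):
        d = cosine_sample_hemisphere(n, rng.random(), rng.random())
        total += radiance_fn(d)
    return total / num_rays
```

For example, a constant sky of radiance 1 gives exactly 1.0 (irradiance π) regardless of the ray count, while uniform hemisphere sampling would need an explicit cos θ factor and would waste rays near the horizon.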

Interesting stuff about traversal 🙂 I don’t even path-trace: I split everything into separate passes, so there is never a situation where one ray spawns another.

I don’t path trace either lol; I’m also doing radiosity iteration. However, in each iteration the texels are sorted, and I use stratified sampling so they are guaranteed to trace in the same direction. I do multiple iterations at low resolution, then a final high-resolution pass, known as ‘Final Gathering’, which is what the ‘brute force’ primary GI engine refers to. I use the same GBuffer idea; UE4’s default Lightmass does too, but with CPU software rasterization. For the skylight, if you don’t build a PDF to importance sample according to luminance, chances are you will get strong fireflies, typically from the sun disk. (A simpler form is to extract the 10 brightest texels and sample them separately, which is what I’m doing now lol; this is equivalent to the ‘max heuristic’ in multiple importance sampling rather than the commonly used ‘balance heuristic’.) Prefiltering (downsampling) to get rid of them is always a good idea, though sometimes (actually most of the time, according to my tests) you may lose some details.
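The two heuristics named above come from Veach’s multiple importance sampling. A minimal Python sketch of both weights, for a sample drawn from strategy `i` out of several strategies with sample counts `counts` and densities `pdfs` evaluated at that sample; the function names are illustrative, and the equivalence between the ‘10 brightest texels’ trick and the max heuristic is the commenter’s claim, not something this code shows.

```python
def balance_heuristic(pdfs, counts, i):
    """Balance heuristic: w_i = n_i * p_i / sum_k n_k * p_k."""
    denom = sum(n * p for n, p in zip(counts, pdfs))
    return counts[i] * pdfs[i] / denom if denom > 0.0 else 0.0


def max_heuristic(pdfs, counts, i):
    """Maximum heuristic: weight 1 for the strategy with the largest n_k * p_k, 0 for all others.
    Ties go to the lowest index so the weights still sum to 1."""
    scores = [n * p for n, p in zip(counts, pdfs)]
    best = max(range(len(scores)), key=lambda k: scores[k])
    return 1.0 if i == best and scores[best] > 0.0 else 0.0


# A sky direction that a luminance-based sampler (strategy 0) finds 4x more likely
# than cosine sampling (strategy 1), with one sample from each strategy.
pdfs, counts = [2.0, 0.5], [1, 1]
print([balance_heuristic(pdfs, counts, k) for k in range(2)])  # [0.8, 0.2]
print([max_heuristic(pdfs, counts, k) for k in range(2)])      # [1.0, 0.0]
```

The balance heuristic blends the strategies smoothly, while the max heuristic hands each direction entirely to whichever strategy is most likely to produce it.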

For the Epic question, I believe the most direct reason is I got an internship at Epic last year lol.

By sorting, do you mean sorting destination hit positions, so there are less cache misses in the sample loop, or something else?

The more traditional style of sorting is this: http://www.andyselle.com/papers/20/sorting-shading.pdf. Here I specifically mean sorting the rays so they have much more coherent memory access during BVH traversal, and thus much better traversal performance. Sorting the destination hits is also good, especially when you have complex materials to evaluate, as the paper points out, but in our lightmapping case it isn’t very useful. Taking the sorting idea to the extreme, we get ‘breadth-first ray traversal’.

In some cases, like when using stratified sampling, the rays are naturally aligned, so sorting won’t be very useful.

Hmm, interesting… I thought of something similar, but the amount of data needed to represent all rays, and the fact that it’s hard to pull off on the GPU, made me abandon the idea.

Yes, and then I noticed we can actually sort the texels and then do aligned tracing, like stratified sampling does, to ‘simulate’ that behavior to some degree, which makes me very happy.
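One way to read ‘aligned tracing’: instead of each texel drawing its own random directions, all texels in a batch take the same stratum of a shared stratified pattern in the same pass, so texels with the same normal shoot parallel rays together. A minimal Python sketch under that assumption; the names are hypothetical, the pattern reuses the cosine-weighted disk mapping, and directions are in each texel’s local frame (z along the normal), so each texel would still rotate them by its own basis.

```python
import math


def stratified_cosine_dirs(grid, jitter=(0.5, 0.5)):
    """grid*grid local-frame directions, one per cell of the unit square, cosine-weighted.
    The jitter (each component in [0,1)) is shared by all texels rather than drawn per texel."""
    dirs = []
    for j in range(grid):
        for i in range(grid):
            u1 = (i + jitter[0]) / grid
            u2 = (j + jitter[1]) / grid
            r, phi = math.sqrt(u1), 2.0 * math.pi * u2
            dirs.append((r * math.cos(phi), r * math.sin(phi), math.sqrt(1.0 - u1)))
    return dirs


def aligned_passes(texel_ids, grid):
    """Yield (pass index, [(texel, local direction), ...]); every texel uses the same stratum
    in a given pass, so a batch of texels on one flat surface traces parallel rays."""
    for k, d in enumerate(stratified_cosine_dirs(grid)):
        yield k, [(t, d) for t in texel_ids]


for k, batch in aligned_passes(["t0", "t1", "t2"], grid=2):
    print(k, len({d for _, d in batch}))  # 1 distinct direction per pass
```

Over all passes each texel still covers every stratum once, so the estimate stays stratified; only the order in which texels visit the strata is synchronized.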

However, if your lightmapped objects are mostly rectangular, you actually don’t need to sort: they are naturally sorted lol

I think I’m starting to understand… you can sort the GBuffer into patches of nearby texels and then also split the rays for these texels by different parts of the hemisphere. Not sure if I got it completely right, but that sounds like it should be more coherent…

I also encountered all of your problems in my lightmapper (https://motsd1inge.wordpress.com/2015/07/13/light-rendering-on-maps/), even the shadow terminator, though in a different context, and I thought there was no solution. The Houdini stuff literally made me throw my arms in the air.

I’ll be sure to check out your project more. Why do you refund your clients? Was the income so ridiculous that you don’t care at all? Awesome job, thanks a lot for the write-up.

Hi, nice article 🙂 There have been no refunds so far. The Asset Store allows it, but nobody has asked for one.

Yes, sorry, I got confused with SEGI, as I was skimming two things at once: https://forum.unity.com/threads/segi-fully-dynamic-global-illumination.410310/page-2 The guy there is refunding, and I don’t get why.

But anyway, back to tech: I never even hoped that lightmaps could be clean on a model like Alix. It’s like you did “nobody told me it was impossible, so I did it”.

I found this article very funny, like an adventure; even though I didn’t know a third of the terms, I also learned something.

Is it possible to use the lightmaps (and vertex colors, I would definitely use that feature) that this program bakes in engines other than Unity? I’ve been looking for an alternative to Lightmass in UE for a long time, and the way I make my art will support external lightmaps. I would definitely give this a try if it is possible. Or are you considering releasing a standalone version at some point?

Bakery core is engine-agnostic; I actually guided one developer with a completely custom engine through the integration process, and it was successful 🙂 There is a lot of glue code between Unity and the lightmapper though, especially given the number of features and corner cases Unity has. Integrating it into UE is possible, but similarly a lot of work, I guess. As for an official standalone release: yes, I’m planning it. Theoretically I could do it any time, but realistically it’s only useful with very detailed documentation.

Very cool! I think I’ll patiently wait until you decide upon a standalone release. Very awesome work, by the way!

Thanks! I don’t have any ETA on the standalone version though, so if you have any deadlines, maybe get in touch with me via Skype and I’ll try to help you out.

Would love to have this product available for Unreal 4. I would even go as far as providing payment / funding if you were interested. Hit me up if you are!

Glad you like it! However, the amount of code it took to adapt it to Unity with all its features and quirks is insane. Probes, LODs, terrains, atlasing, making sure batching doesn’t break, UI… I really don’t have the time or resources to repeat it for Unreal; I can barely handle all the support requests from existing users.

Hi there,

I am stuck in a situation which I would like to discuss, maybe you can help.

I have baked a scene with Bakery and exported the scene from Unity without the Bakery plugin selected, but the exported scene has the lightmaps. Now, when I start a new Unity project and import this exported asset, it does not load the lightmaps. For that I have to import the Bakery plugin again.

Is it possible to load the baked lightmaps without adding the Bakery plugin to the new project?

I will appreciate any help.

Thanks

Hi Qaisar,

The best place to discuss problems like this is the official forum: https://forum.unity.com/threads/bakery-gpu-lightmapper-v1-55-released.536008

In your case, please read the FAQ: https://docs.google.com/document/d/19ZDUAVJA69YHLMMCzc3FOneTM5IfEGeiLd7P_qYfJ9c/edit

Check “How do I share a scene with someone who doesn’t have Bakery installed?” question.

Hello Mr F, thanks for your post. I am writing a lightmapper for our custom engine using DXR, and this post helped me a lot to get rid of all those ugly artifacts.

May I ask how you bake light probes on the GPU? Or are they done on the CPU side only?

Thanks

Hi Mike 🙂

I bake light probes just like lightmaps (laid out in a similar UVGBuffer), except they have no geometry of their own in the scene and cast a full sphere of uniform rays instead of a normal-oriented hemisphere. The results are projected into an SH basis and saved as one texture per coefficient. I then read these textures and get the full SH.
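A minimal Python sketch of that projection, assuming order-2 (9-coefficient) real spherical harmonics with the usual constants and a hypothetical `radiance_fn(direction)` callback standing in for the traced rays; the comment doesn’t say which SH order Bakery stores. With uniform sphere sampling the pdf is 1/(4π), so each coefficient is 4π times the average of radiance times the basis function. Writing each coefficient to its own texture is then only a storage layout choice.

```python
import math
import random


def sh9(d):
    """Real SH basis, bands 0..2, for a unit direction d = (x, y, z)."""
    x, y, z = d
    return [
        0.282095,
        0.488603 * y, 0.488603 * z, 0.488603 * x,
        1.092548 * x * y, 1.092548 * y * z, 0.315392 * (3.0 * z * z - 1.0),
        1.092548 * x * z, 0.546274 * (x * x - y * y),
    ]


def uniform_sphere_dir(u1, u2):
    """Map two uniform numbers in [0,1) to a uniformly distributed unit direction (pdf 1/(4*pi))."""
    z = 1.0 - 2.0 * u1
    r = math.sqrt(max(0.0, 1.0 - z * z))
    phi = 2.0 * math.pi * u2
    return (r * math.cos(phi), r * math.sin(phi), z)


def bake_probe(radiance_fn, num_rays, rng):
    """Project radiance arriving at a probe onto 9 SH coefficients using a full sphere of uniform rays."""
    coeffs = [0.0] * 9
    for _ in range(num_rays):
        d = uniform_sphere_dir(rng.random(), rng.random())
        radiance = radiance_fn(d)
        for k, y in enumerate(sh9(d)):
            coeffs[k] += radiance * y
    scale = 4.0 * math.pi / num_rays  # divide by the pdf 1/(4*pi) and average over rays
    return [c * scale for c in coeffs]


def eval_sh9(coeffs, d):
    """Reconstruct radiance in direction d from the 9 coefficients."""
    return sum(c * y for c, y in zip(coeffs, sh9(d)))
```

For a constant environment of radiance 1, the first coefficient comes out as 4π × 0.282095 ≈ 3.545 (that is, √(4π)), and evaluating the band-0 term alone reconstructs a radiance of about 1 in every direction.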

Hi Mr F.

May I ask how you deal with LOD objects? Do you just bake N lightmaps for N LODs, or use some other method?

I am working on a GPU lightmapper now. I tried baking a single lightmap for the highest LOD and mapping it to the other LODs.

However, this method yields many artifacts because of the unreliable mapping function…

I have to split different LODs into different lightmap atlases so I can launch lightmapping kernels for them separately with different scene geometry. LODs only “see” objects of the same (or closest) detail level (+ non-LOD part of the scene).
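A minimal Python sketch of the visibility rule described above: a lightmapping pass for LOD level `k` traces against all non-LOD geometry plus, from every LOD group, the mesh at the closest available level. The dictionary layout and names are hypothetical, and resolving ties toward the more detailed level is an arbitrary choice made here.

```python
def closest_level(levels, k):
    """Pick the available LOD level closest to k; ties go to the more detailed (lower) level."""
    return min(levels, key=lambda lvl: (abs(lvl - k), lvl))


def visible_geometry(static_meshes, lod_groups, k):
    """Geometry a pass for LOD level k would trace against: every non-LOD mesh plus,
    from each LOD group (a dict mapping level -> mesh id), the closest available level."""
    geometry = list(static_meshes)
    for group in lod_groups:
        geometry.append(group[closest_level(group.keys(), k)])
    return geometry


static = ["floor", "walls"]
groups = [{0: "tree_lod0", 1: "tree_lod1", 2: "tree_lod2"}, {0: "rock_lod0", 1: "rock_lod1"}]
print(visible_geometry(static, groups, 2))  # ['floor', 'walls', 'tree_lod2', 'rock_lod1']
```

Each LOD level would then get its own atlas and its own kernel launch against this geometry list, so a LOD1 mesh is never shadowed by its own LOD0 counterpart.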