1. Introduction

- We propose an additional prediction head for YOLOv5 to improve detection performance on small faces. The head is designed to receive a low-level (and thus high-resolution) feature map.

- To improve multi-scale face detection, we propose a Multi-Scale Image Fusion (MSIF) layer that learns faces at multiple scales effectively.

- To diversify the scales of face objects seen during DNN training, we propose a Copy-Paste augmentation technique for faces.

- Among speed-focusing face detectors, the proposed method achieves state-of-the-art (SOTA) performance. Specifically, FAFD-Small achieves APs of 95.0%, 93.5%, and 87.0% on the Easy, Medium, and Hard subsets of the WiderFace [7] dataset, respectively, and FAFD-Nano achieves 93.0%, 91.1%, and 84.1% on the same subsets. These are the best results among the speed-focusing methods.
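To illustrate why a low-level feature map helps with small faces, the following minimal sketch computes the prediction grid size at each pyramid level. It assumes the conventional strides of 8, 16, and 32 for the YOLOv5 levels P3 to P5 and a stride of 4 for the additional P2 level; the 640-pixel input size and the function names are illustrative assumptions, not values taken from this paper.

```python
# Conventional strides per pyramid level; P2 is the additional low-level head.
STRIDES = {"P2": 4, "P3": 8, "P4": 16, "P5": 32}


def grid_sizes(input_size):
    """Return the side length of the prediction grid at each level."""
    return {level: input_size // stride for level, stride in STRIDES.items()}


def cell_span(face_px, stride):
    """Number of grid cells that a face of `face_px` pixels covers along one axis."""
    return face_px / stride


if __name__ == "__main__":
    # 640 is an assumed input size used only for illustration.
    for level, side in grid_sizes(640).items():
        span = cell_span(8, STRIDES[level])
        print(f"{level}: {side}x{side} grid, an 8 px face spans {span:.2f} cells")
```

Under these assumptions, an 8-pixel face covers two cells on the P2 grid but only a quarter of a cell on the P5 grid, which is one way to see why the higher-resolution head is better suited to small faces.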

2. Related Work

2.1. Accuracy-Focusing Face Detection

2.2. Speed-Focusing Face Detection

3. Proposed Methods

3.1. Overall Structure of the Proposed Framework

3.2. Additional Prediction Head for Small Faces

3.3. Multi-Scale Image Fusion

- Resize a W × H input image to form an image pyramid with the size of {}.

- Feature maps are extracted by applying the CBS (Convolution + Batch Normalization + SiLU) layer shown in Figure 2a to the three resized images in (i). In the figure, k, s, and p denote the kernel size, stride, and padding, respectively. Each extracted feature map is half the size of its corresponding pyramid image.

- The feature map of (iii) is up-sampled by bilinear interpolation. Then, before it is passed to the next layer, it is added pixel-wise to the medium-level feature map in (iii).
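The steps above can be sketched in plain Python as shape arithmetic plus a single-channel fusion. This is only an illustration under stated assumptions: the pyramid scales (1, 1/2, 1/4) and the convolution parameters (k = 3, s = 2, p = 1) are hypothetical, since the text does not give them, and 2D lists stand in for real feature tensors.

```python
def conv_out_size(n, k, s, p):
    """Output length of a convolution over n pixels (kernel k, stride s, padding p)."""
    return (n + 2 * p - k) // s + 1


def pyramid_feature_sizes(width, height, scales=(1.0, 0.5, 0.25), k=3, s=2, p=1):
    """Feature-map (width, height) for each pyramid image; scales are assumed."""
    sizes = []
    for scale in scales:
        w, h = int(width * scale), int(height * scale)
        sizes.append((conv_out_size(w, k, s, p), conv_out_size(h, k, s, p)))
    return sizes


def bilinear_resize(src, out_h, out_w):
    """Bilinear interpolation of a 2D list using pixel-centre alignment."""
    in_h, in_w = len(src), len(src[0])
    out = []
    for i in range(out_h):
        # Map the output pixel centre back to source coordinates and clamp.
        y = min(max((i + 0.5) * in_h / out_h - 0.5, 0.0), in_h - 1.0)
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        wy = y - y0
        row = []
        for j in range(out_w):
            x = min(max((j + 0.5) * in_w / out_w - 0.5, 0.0), in_w - 1.0)
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            wx = x - x0
            top = src[y0][x0] * (1 - wx) + src[y0][x1] * wx
            bottom = src[y1][x0] * (1 - wx) + src[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        out.append(row)
    return out


def fuse(fine, coarse):
    """Up-sample `coarse` to the size of `fine`, then add the maps pixel-wise."""
    up = bilinear_resize(coarse, len(fine), len(fine[0]))
    return [[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(fine, up)]


if __name__ == "__main__":
    print(pyramid_feature_sizes(640, 640))
    print(fuse([[1.0] * 4 for _ in range(4)], [[2.0, 2.0], [2.0, 2.0]]))
```

With the assumed stride-2 convolution, each feature map is half the size of its pyramid image, so up-sampling a coarser map by a factor of two makes it match the next finer map before the addition.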

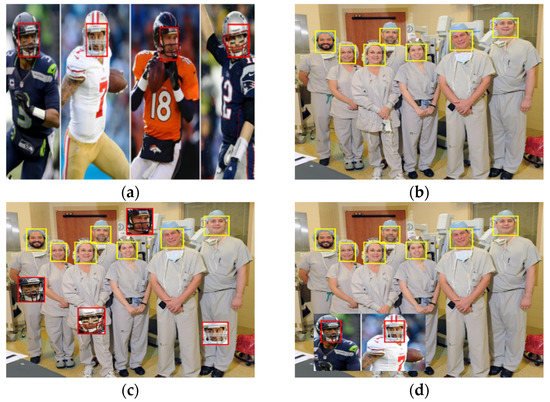

3.4. Copy-Paste Augmentation for Face

- (i) The reference image for the original image is chosen at random from the training dataset.

- (ii) Get from .

- (iii) is randomly determined within the experimentally defined range of [0.1, 0.4].

- (iv) The cropped region is obtained by randomly cropping an area of size (, ) from the reference image.

- (v) Using the bounding box information of the face objects in the reference image, check whether the cropped region contains any face object. Specifically, if the cropped region contains the center point of a face bounding box, proceed to (vi). Otherwise, return to (iii) for re-cropping.

- (vi) Check whether the cropped region includes other body parts, such as the shoulders, in addition to the face. To this end, we simply compare the sizes of the face bounding boxes in the cropped region with the size of the cropped region itself. If the widths and heights of all bounding boxes are less than 60% of those of the cropped region, proceed to (vii). Otherwise, we assume that the cropped region consists mainly of the face and return to (iii) for re-cropping. Note that the threshold of 60% was determined experimentally.

- (vii) The scale factor is randomly determined within the experimentally defined range of [0.5, 1.5].

- (viii) Resize the cropped region by the scale factor.

- (ix) Paste the resized crop into the original image in a region that does not overlap the existing face regions.
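The geometry of steps (iii) to (ix) can be sketched as follows. This is a minimal illustration that handles bounding boxes only, with no pixel data. It assumes that the value drawn from [0.1, 0.4] scales both sides of the reference image to give the crop size, that boxes are (x1, y1, x2, y2) tuples, and that a bounded number of retries is acceptable; all function and parameter names are hypothetical.

```python
import random


def overlaps(a, b):
    """True if boxes a and b, given as (x1, y1, x2, y2), intersect."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def sample_crop(ref_w, ref_h, ref_boxes, rng, ratio_range=(0.1, 0.4),
                max_face_frac=0.6, max_tries=1000):
    """Steps (iii)-(vi): return (crop_w, crop_h, faces in crop coordinates) or None."""
    for _ in range(max_tries):
        ratio = rng.uniform(*ratio_range)              # (iii)
        cw, ch = ratio * ref_w, ratio * ref_h          # assumed meaning of the ratio
        cx = rng.uniform(0, ref_w - cw)                # (iv) random crop position
        cy = rng.uniform(0, ref_h - ch)
        inside = [b for b in ref_boxes                 # (v) face centre inside the crop
                  if cx <= (b[0] + b[2]) / 2 <= cx + cw
                  and cy <= (b[1] + b[3]) / 2 <= cy + ch]
        if not inside:
            continue
        if all(b[2] - b[0] < max_face_frac * cw and b[3] - b[1] < max_face_frac * ch
               for b in inside):                       # (vi) crop keeps some context
            faces = [(max(b[0] - cx, 0.0), max(b[1] - cy, 0.0),
                      min(b[2] - cx, cw), min(b[3] - cy, ch)) for b in inside]
            return cw, ch, faces
    return None


def paste_position(img_w, img_h, pw, ph, existing, rng, max_tries=1000):
    """Step (ix): top-left corner where the patch overlaps no existing face."""
    if pw > img_w or ph > img_h:
        return None
    for _ in range(max_tries):
        x, y = rng.uniform(0, img_w - pw), rng.uniform(0, img_h - ph)
        if not any(overlaps((x, y, x + pw, y + ph), e) for e in existing):
            return x, y
    return None


def copy_paste_boxes(img_size, img_boxes, ref_size, ref_boxes, rng,
                     scale_range=(0.5, 1.5)):
    """Return the face boxes of the original image after one copy-paste attempt."""
    boxes = list(img_boxes)
    found = sample_crop(ref_size[0], ref_size[1], ref_boxes, rng)
    if found is None:
        return boxes
    cw, ch, faces = found
    scale = rng.uniform(*scale_range)                  # (vii)
    pw, ph = cw * scale, ch * scale                    # (viii)
    pos = paste_position(img_size[0], img_size[1], pw, ph, boxes, rng)
    if pos is None:
        return boxes
    px, py = pos
    return boxes + [(px + f[0] * scale, py + f[1] * scale,
                     px + f[2] * scale, py + f[3] * scale) for f in faces]


if __name__ == "__main__":
    demo = copy_paste_boxes((640, 640), [(100.0, 100.0, 200.0, 220.0)],
                            (640, 480), [(300.0, 200.0, 340.0, 250.0)],
                            random.Random(0))
    print(demo)
```

A full implementation would also copy the pixels of the resized crop into the original image at the chosen position. The sketch returns the original boxes unchanged when no valid crop or paste location is found within the retry budget, which is a design choice made here rather than something stated in the text.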

4. Results

4.1. Dataset

4.2. Experiments

4.3. Ablation Study

4.4. Comparative Study

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Kumar, A.; Marks, T.K.; Mou, W.; Wang, Y.; Jones, M.; Cherian, A.; Koike-Akino, T.; Liu, X.; Feng, C. LUVLi Face Alignment: Estimating Landmarks’ Location, Uncertainty, and Visibility Likelihood. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 8236–8246.

- Ning, X.; Duan, P.; Li, W.; Zhang, S. Real-time 3D face alignment using an encoder-decoder network with an efficient deconvolution layer. IEEE Signal Processing Lett. 2020, 27, 1944–1948.

- Chang, J.; Lan, Z.; Cheng, C.; Wei, Y. Data Uncertainty Learning in Face Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 5710–5719.

- Kim, Y.; Park, W.; Roh, M.-C.; Shin, J. Groupface: Learning Latent Groups and Constructing Group-Based Representations for Face Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 5621–5630.

- Anzalone, L.; Barra, P.; Barra, S.; Narducci, F.; Nappi, M. Transfer Learning for Facial Attributes Prediction and Clustering. In Proceedings of the 7th International Conference on Smart City and Informatization, Guangzhou, China, 12–15 November 2019; pp. 105–117.

- Karkkainen, K.; Joo, J. FairFace: Face Attribute Dataset for Balanced Race, Gender, and Age for Bias Measurement and Mitigation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2021; pp. 1548–1558.

- Yang, S.; Luo, P.; Loy, C.-C.; Tang, X. Wider Face: A Face Detection Benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5525–5533.

- Earp, S.W.; Noinongyao, P.; Cairns, J.A.; Ganguly, A. Face detection with feature pyramids and landmarks. arXiv 2019, arXiv:1912.00596.

- Deng, J.; Guo, J.; Zhou, Y.; Yu, J.; Kotsia, I.; Zafeiriou, S. Retinaface: Single-stage dense face localisation in the wild. arXiv 2019, arXiv:1905.00641.

- Qi, D.; Tan, W.; Yao, Q.; Liu, J. YOLO5Face: Why Reinventing a Face Detector. arXiv 2021, arXiv:2105.12931.

- Zhang, B.; Li, J.; Wang, Y.; Tai, Y.; Wang, C.; Li, J.; Huang, F.; Xia, Y.; Pei, W.; Ji, R. Asfd: Automatic and scalable face detector. arXiv 2020, arXiv:2003.11228.

- Zhang, S.; Chi, C.; Lei, Z.; Li, S.Z. RefineFace: Refinement Neural Network for High Performance Face Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 4008–4020.

- Tang, X.; Du, D.K.; He, Z.; Liu, J. Pyramidbox: A Context-Assisted Single Shot Face Detector. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 797–813.

- Li, Z.; Tang, X.; Han, J.; Liu, J.; He, R. Pyramidbox++: High performance detector for finding tiny face. arXiv 2019, arXiv:1904.00386.

- Najibi, M.; Samangouei, P.; Chellappa, R.; Davis, L.S. Ssh: Single Stage Headless Face Detector. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4875–4884.

- Zhang, S.; Zhu, X.; Lei, Z.; Shi, H.; Wang, X.; Li, S.Z. S3fd: Single Shot Scale-Invariant Face Detector. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 192–201.

- Li, J.; Wang, Y.; Wang, C.; Tai, Y.; Qian, J.; Yang, J.; Wang, C.; Li, J.; Huang, F. DSFD: Dual Shot Face Detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5060–5069.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. Ssd: Single Shot Multibox Detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37.

- Zhao, X.; Liang, X.; Zhao, C.; Tang, M.; Wang, J. Real-time multi-scale face detector on embedded devices. Sensors 2019, 19, 2158.

- Jocher, G.; Stoken, A.; Chaurasia, A.; Borovec, J.; Kwon, Y.; Michael, K.; Changyu, L.; Fang, J.; Abhiram, V.; Skalski, P.; et al. Ultralytics/yolov5: v6.0 - YOLOv5n ‘Nano’ models, Roboflow integration, TensorFlow export, OpenCV DNN support. Zenodo Tech. Rep. 2021.

- Feng, Y.; Yu, S.; Peng, H.; Li, Y.-R.; Zhang, J. Detect Faces Efficiently: A Survey and Evaluations. arXiv 2021, arXiv:2112.01787.

- Yashunin, D.; Baydasov, T.; Vlasov, R. MaskFace: Multi-Task Face and Landmark Detector. arXiv 2020, arXiv:2005.09412.

- Chi, C.; Zhang, S.; Xing, J.; Lei, Z.; Li, S.Z.; Zou, X. Selective Refinement Network for High Performance Face Detection. In Proceedings of the 33rd AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 8231–8238.

- Liu, W.; Liao, S.; Ren, W.; Hu, W.; Yu, Y. High-Level Semantic Feature Detection: A New Perspective for Pedestrian Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5187–5196.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Cai, Z.; Vasconcelos, N. Cascade r-cnn: Delving into High Quality Object Detection. In Proceedings of the IEEE conference on computer vision and pattern recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Zhang, S.; Wen, L.; Bian, X.; Lei, Z.; Li, S.Z. Single-Shot Refinement Neural Network for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4203–4212.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Zhang, S.; Zhu, X.; Lei, Z.; Shi, H.; Wang, X.; Li, S.Z. Faceboxes: A CPU Real-Time Face Detector with High Accuracy. In Proceedings of the 2017 IEEE International Joint Conference on Biometrics (IJCB), Denver, CO, USA, 1–4 October 2017; pp. 1–9.

- Yu, S. libfacedetection.train; GitHub: San Francisco, CA, USA, 2020; Available online: https://github.com/ShiqiYu/libfacedetection.train (accessed on 6 February 2022).

- Linzaer. Ultra-Light-Fast-Generic-Face-Detector-1MB; GitHub: San Francisco, CA, USA, 2019; Available online: https://github.com/Linzaer/Ultra-Light-Fast-Generic-Face-Detector-1MB (accessed on 6 February 2022).

- Jin, H.; Zhang, S.; Zhu, X.; Tang, Y.; Lei, Z.; Li, S.Z. Learning Lightweight Face Detector with Knowledge Distillation. In Proceedings of the 2019 International Conference on Biometrics (ICB), Crete, Greece, 4–7 June 2019; pp. 1–7.

- He, Y.; Xu, D.; Wu, L.; Jian, M.; Xiang, S.; Pan, C. LFFD: A light and fast face detector for edge devices. arXiv 2019, arXiv:1904.10633.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Dvornik, N.; Mairal, J.; Schmid, C. Modeling Visual Context Is Key to Augmenting Object Detection Datasets. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 364–380.

- Ghiasi, G.; Cui, Y.; Srinivas, A.; Qian, R.; Lin, T.-Y.; Cubuk, E.D.; Le, Q.V.; Zoph, B. Simple Copy–Paste Is a Strong Data Augmentation Method for Instance Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2918–2928.

- Dwibedi, D.; Misra, I.; Hebert, M. Cut, Paste and Learn: Surprisingly Easy Synthesis for Instance Detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1301–1310.

- Kisantal, M.; Wojna, Z.; Murawski, J.; Naruniec, J.; Cho, K. Augmentation for small object detection. arXiv 2019, arXiv:1902.07296.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. Mixup: Beyond Empirical Risk Minimization. arXiv 2017, arXiv:1710.09412.

- Zitnick, C.L.; Dollár, P. Edge boxes: Locating Object Proposals from Edges. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 5–12 September 2014; pp. 391–405.

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-captured Scenarios. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 2778–2788.

- Arthur, D.; Vassilvitskii, S. K-Means++: The Advantages of Careful Seeding; Stanford: Stanford, CA, USA, 2006.

| Model | Used Layer | Size of Anchor Box 1 | Size of Anchor Box 2 | Size of Anchor Box 3 |
|---|---|---|---|---|
| Baseline | First detection layer | (72, 94) | (130, 170) | (229, 304) |
| Baseline | Second detection layer | (16, 21) | (26, 33) | (43, 55) |
| Baseline | Third detection layer | (4, 5) | (7, 9) | (11, 14) |
| Proposed FAFD | First detection layer | (117, 152) | (167, 218) | (249, 340) |
| Proposed FAFD | Second detection layer | (32, 40) | (46, 63) | (69, 97) |
| Proposed FAFD | Third detection layer | (12, 14) | (16, 21) | (22, 29) |
| Proposed FAFD | Fourth detection layer | (4, 5) | (6, 7) | (8, 10) |

| Method | WiderFace Validation Set | |||||

|---|---|---|---|---|---|---|

| Baseline | MSIF | Copy-Paste | P2 | APEasy | APMedium | APHard |

| ✓ | 94.9 | 93.1 | 84.2 | |||

| ✓ | ✓ | 95.0 | 93.4 | 85.5 | ||

| ✓ | ✓ | 95.0 | 93.3 | 84.2 | ||

| ✓ | ✓ | 94.9 | 93.2 | 85.7 | ||

| ✓ | ✓ | ✓ | 94.8 | 93.3 | 86.9 | |

| ✓ | ✓ | ✓ | ✓ | 95.0 | 93.5 | 87.0 |

| Method | WiderFace Validation Set | |||||

|---|---|---|---|---|---|---|

| Baseline | MSIF | P2 | APSmall | APMedium | APLarge | APAll |

| ✓ | 78.4 | 94.7 | 94.9 | 83.3 | ||

| ✓ | ✓ | 80.4 | 94.8 | 95.0 | 84.8 | |

| ✓ | ✓ | 80.5 | 94.7 | 92.8 | 85.1 | |

| ✓ | ✓ | ✓ | 82.3 | 94.6 | 92.1 | 86.4 |

| Model | #Params (×10⁶) | AVG GFLOPs | WiderFace Val. AP (Easy) | WiderFace Val. AP (Medium) | WiderFace Val. AP (Hard) | AVG Latency (ms) | Device |
|---|---|---|---|---|---|---|---|
| FaceBoxes [30] | 1.013 | 1.5 | 84.5 | 77.7 | 40.4 | 23.68 | INTEL i7-5930K |
| YuFaceDetectNet [31] | 0.085 | 2.5 | 85.6 | 84.2 | 72.7 | 56.28 | |
| ULFG-slim-320 [32] | 0.390 | 2.0 | 65.2 | 64.6 | 52.0 | 21.4 | |
| ULFG-slim-640 [32] | | | 81.0 | 79.4 | 63.0 | | |
| ULFG-RFB-320 [32] | 0.401 | 2.4 | 68.3 | 67.8 | 57.1 | 23.17 | |
| ULFG-RFB-640 [32] | | | 81.6 | 80.2 | 66.3 | | |
| LFFD-v2 [34] | 1.520 | 37.8 | 87.5 | 86.3 | 75.2 | 185.17 | |
| LFFD-v1 [34] | 2.282 | 55.6 | 91.0 | 88.0 | 77.8 | 239.43 | |
| FAFD-Nano (Ours) | 1.808 | 8.3 | 93.0 | 91.1 | 84.1 | 94.4 | INTEL i7-7700K |
| FAFD-Small (Ours) | 7.193 | 29.3 | 95.0 | 93.5 | 87.0 | 207.1 | |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).